Python crawler: a code example for collecting all external links across an entire site

Author: 土肥圆的猿  Date: 2021-03-01 09:52:39

Flowchart of a site crawler that collects all external links
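The core decision such a crawler makes for every link is whether it stays on the current site or leaves it. Here is a minimal sketch of that step using only the standard library. The function name `classify_links` and the sample hrefs are assumptions for illustration and are not part of the original code, which uses regular expressions for the same purpose.

```python
from urllib.parse import urljoin, urlparse


def classify_links(page_url, hrefs):
    """Split hrefs found on page_url into (internal, external) sets of absolute URLs."""
    site_host = urlparse(page_url).netloc.lower()
    internal, external = set(), set()
    for href in hrefs:
        # Resolve relative links such as "/path" against the page URL
        absolute = urljoin(page_url, href)
        parts = urlparse(absolute)
        if parts.scheme not in ("http", "https"):
            continue  # skip mailto:, javascript: and similar
        if parts.netloc.lower() == site_host:
            internal.add(absolute)
        else:
            external.add(absolute)
    return internal, external
```

Comparing hostnames rather than matching substrings means a link is counted as internal only when it really points at the same host, so `www.jb51.net` and `blog.csdn.net` end up in different sets.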

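The example below crawls this site's article "python绘制条形图方法代码详解" (a detailed walkthrough of bar-chart plotting code in Python); feel free to use it as a reference.

Complete code: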

#!/usr/bin/env python3
# coding=utf-8
# Ported to Python 3: urllib2 is replaced by urllib.request.

import random
import re
from urllib.parse import urljoin
from urllib.request import urlopen

from bs4 import BeautifulSoup

random.seed()  # seed from the operating system or the current time


# Retrieves a list of all internal links found on a page
def getInternalLinks(bsObj, includeUrl):
    internalLinks = []
    # Finds all links that begin with "/" or contain the site's own domain
    pattern = re.compile("^(/|.*" + re.escape(includeUrl) + ")")
    for link in bsObj.find_all("a", href=pattern):
        if link.attrs['href'] is not None:
            if link.attrs['href'] not in internalLinks:
                internalLinks.append(link.attrs['href'])
    return internalLinks


# Retrieves a list of all external links found on a page
def getExternalLinks(bsObj, excludeUrl):
    externalLinks = []
    # Finds all links that start with "http" or "www" and do
    # not contain the current site's domain
    pattern = re.compile("^(http|www)((?!" + re.escape(excludeUrl) + ").)*$")
    for link in bsObj.find_all("a", href=pattern):
        if link.attrs['href'] is not None:
            if link.attrs['href'] not in externalLinks:
                externalLinks.append(link.attrs['href'])
    return externalLinks


def splitAddress(address):
    # Strips the scheme (http:// or https://) and splits on "/",
    # so element 0 is the domain
    addressParts = re.sub(r"^https?://", "", address).split("/")
    return addressParts


def getRandomExternalLink(startingPage):
    html = urlopen(startingPage)
    bsObj = BeautifulSoup(html, "html.parser")
    domain = splitAddress(startingPage)[0]
    externalLinks = getExternalLinks(bsObj, domain)
    if len(externalLinks) == 0:
        # No external links on this page: fall back to a random internal link
        internalLinks = getInternalLinks(bsObj, domain)
        return urljoin(startingPage, random.choice(internalLinks))
    else:
        return random.choice(externalLinks)


# Hops from one random link to the next without a stopping condition;
# defined for reference but not called by this script
def followExternalOnly(startingSite):
    externalLink = getRandomExternalLink(startingSite)
    print("Random external link is: " + externalLink)
    followExternalOnly(externalLink)


# Collects a list of all external URLs found on the site
allExtLinks = set()
allIntLinks = set()


def getAllExternalLinks(siteUrl):
    html = urlopen(siteUrl)
    bsObj = BeautifulSoup(html, "html.parser")
    domain = splitAddress(siteUrl)[0]
    internalLinks = getInternalLinks(bsObj, domain)
    externalLinks = getExternalLinks(bsObj, domain)
    for link in externalLinks:
        if link not in allExtLinks:
            allExtLinks.add(link)
            print(link)
    for link in internalLinks:
        # Resolve relative links such as "/article/..." before fetching them
        link = urljoin(siteUrl, link)
        if link not in allIntLinks:
            print("About to get link:" + link)
            allIntLinks.add(link)
            getAllExternalLinks(link)


getAllExternalLinks("https://www.jb51.net/article/130968.htm")
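One caveat about the code above: getAllExternalLinks calls itself once for every new internal link, so on a large site it can exceed Python's default recursion limit. The sketch below shows one possible iterative alternative that uses a queue instead. It is not the original author's method. The names `HrefParser`, `crawl_external_links`, the `fetch` callable and the `max_pages` cap are all assumptions introduced for illustration, and it parses links with the standard library's `html.parser` rather than BeautifulSoup so that it has no third-party dependencies.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class HrefParser(HTMLParser):
    """Collects the href value of every <a> tag fed to it."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def crawl_external_links(start_url, fetch, max_pages=50):
    """Breadth-first crawl of one site; returns the set of external URLs found.

    fetch is a hypothetical hook: any callable that maps a URL to HTML text,
    so the network layer can be swapped out or faked.
    """
    site_host = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    external = set()
    pages_fetched = 0
    while queue and pages_fetched < max_pages:
        page_url = queue.popleft()
        parser = HrefParser()
        parser.feed(fetch(page_url))
        pages_fetched += 1
        for href in parser.hrefs:
            absolute = urljoin(page_url, href)  # resolve relative links
            parts = urlparse(absolute)
            if parts.scheme not in ("http", "https"):
                continue  # skip mailto:, javascript: and similar
            if parts.netloc != site_host:
                external.add(absolute)
            elif absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return external
```

To run it against a live site, `fetch` could be something like `lambda url: urlopen(url).read().decode("utf-8", errors="replace")` with `urlopen` imported from `urllib.request`. The `max_pages` cap keeps the crawl bounded; raise it with care and stay polite to the target server.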

Running the complete code produces the following crawl results:

Source: http://blog.csdn.net/qq_16103331/article/details/52690558

Tags: python, crawler

猜你喜欢

Python并发之多进程的方法实例代码

2022-04-13 12:43:54

基于vue.js路由参数的实例讲解——简单易懂

2024-05-29 22:48:25

go语言中函数与方法介绍

2024-04-23 09:34:13

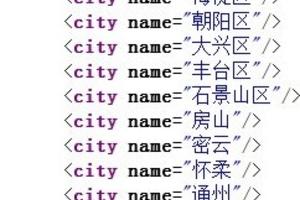

python解析xml文件实例分享

2021-11-20 07:56:14

PHP children()函数讲解

2023-06-13 04:38:38

go语言中[]*int和*[]int的具体使用

2024-05-29 22:08:38

vue单页面在微信下只能分享落地页的解决方案

2024-05-09 10:52:19

python委派生成器的具体方法

2022-06-14 01:23:39

阿里巴巴技术文章分享 Javascript继承机制的实现

2024-04-30 09:59:16

SQL语句实例说明 方便学习mysql的朋友

2012-11-30 20:02:43

MySql中的longtext字段的返回问题及解决

2024-01-12 23:32:41

详解Golang中字符串的使用

2024-04-28 09:16:35

PHP中PDO基础教程 入门级

2023-11-14 16:34:39

python密码学各种加密模块教程

2021-03-10 05:32:55

js取得当前网址

2024-04-10 11:03:14

perl文件读取的几种处理方式小结

2023-03-03 20:36:43

微信小程序实现分页加载效果

2024-06-15 03:33:57

python开根号实例讲解

2022-10-03 12:29:07

Python3使用腾讯云文字识别(腾讯OCR)提取图片中的文字内容实例详解

2023-11-16 22:45:05

从多个tfrecord文件中无限读取文件的例子

2023-10-23 13:29:19